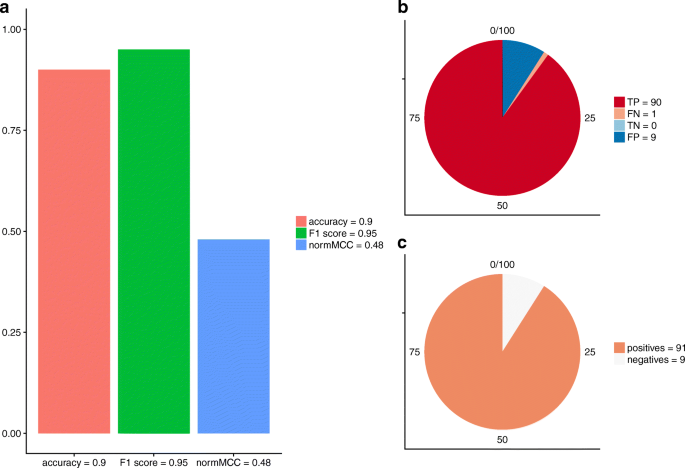

The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation | BMC Genomics
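The comparison in this title can be made concrete with the standard confusion-matrix formulas. Below is a minimal Python sketch that assumes the counts TP, FP, TN and FN come from a hypothetical imbalanced test set. The function name `binary_metrics`, the example counts, and the choice to report MCC as 0 when a marginal is empty are illustrative assumptions, not details taken from the article.

```python
import math


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, F1 and MCC from the four cells of a binary confusion matrix.

    Hypothetical helper for illustration only.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total

    # F1 is the harmonic mean of precision and recall: 2TP / (2TP + FP + FN)
    f1_denom = 2 * tp + fp + fn
    f1 = 2 * tp / f1_denom if f1_denom else 0.0

    # MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))
    mcc_denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Assumption: report MCC as 0 when any row/column total is 0 (denominator 0)
    mcc = (tp * tn - fp * fn) / mcc_denom if mcc_denom else 0.0

    return {"accuracy": accuracy, "f1": f1, "mcc": mcc}


if __name__ == "__main__":
    # Hypothetical test set: 90 positives, 10 negatives,
    # and a classifier that labels every sample positive.
    print(binary_metrics(tp=90, fp=10, tn=0, fn=0))
```

On this hypothetical all-positive classifier, accuracy is 0.9 and F1 is about 0.95, while MCC is 0. The predictions carry no information about the negative class, and only MCC reflects that.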

Hilab System versus Sysmex XE-2100 accuracy, specificity, sensitivity,...

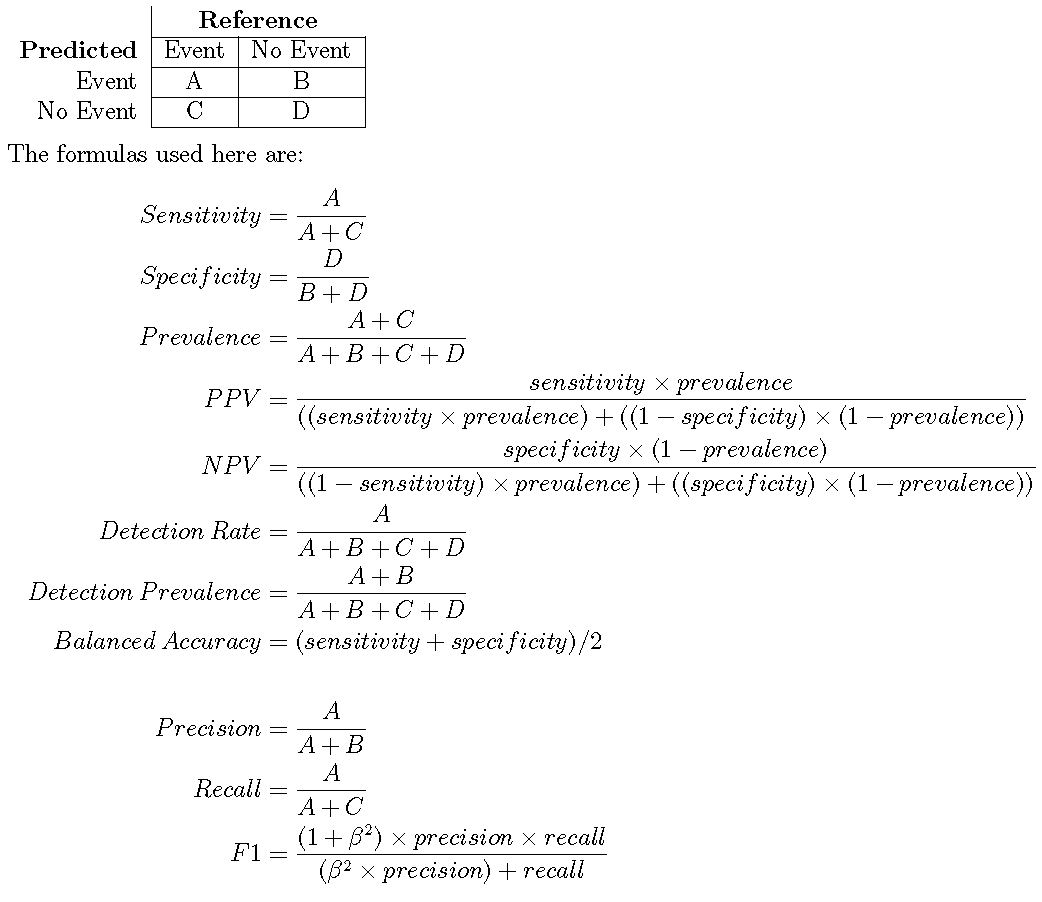

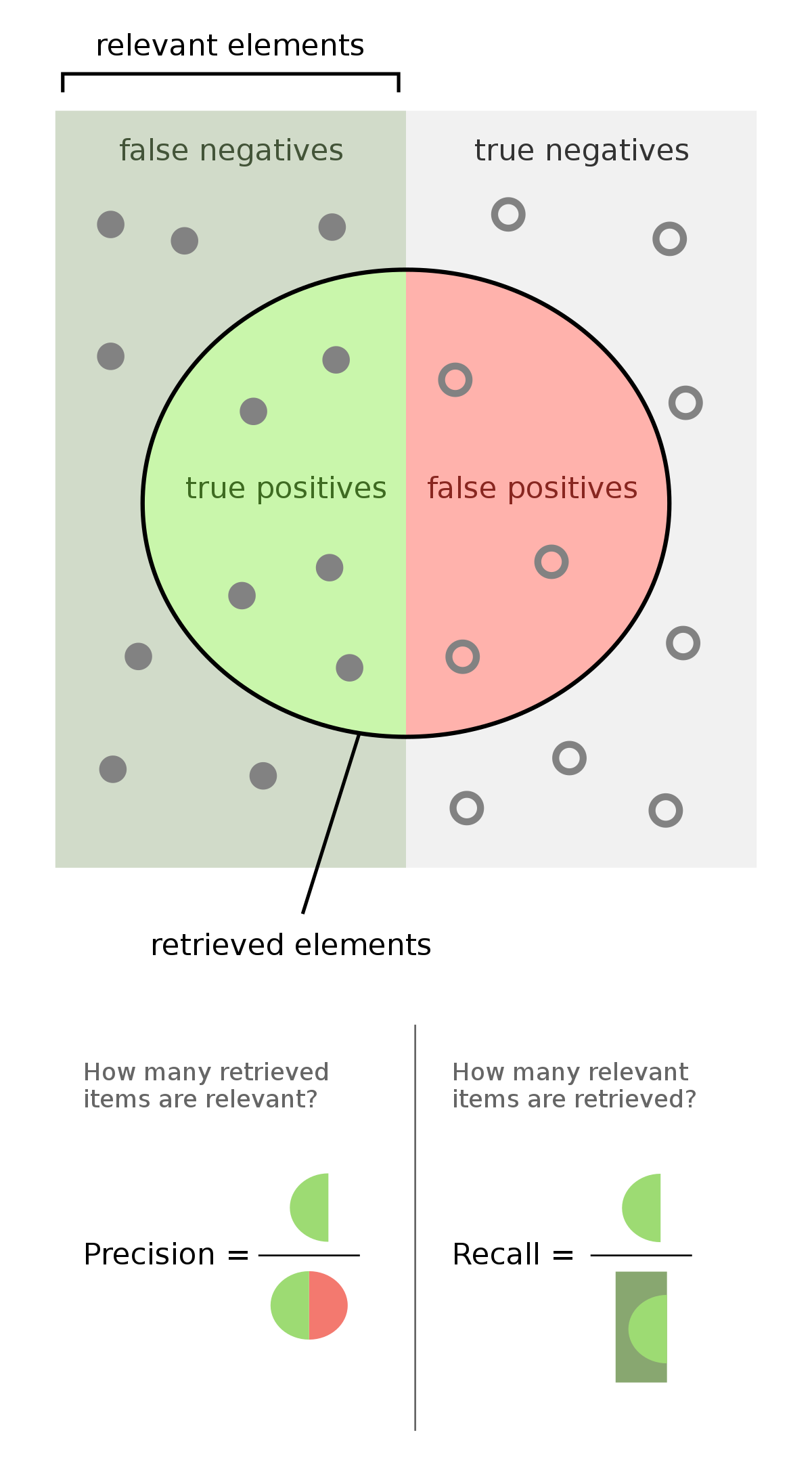

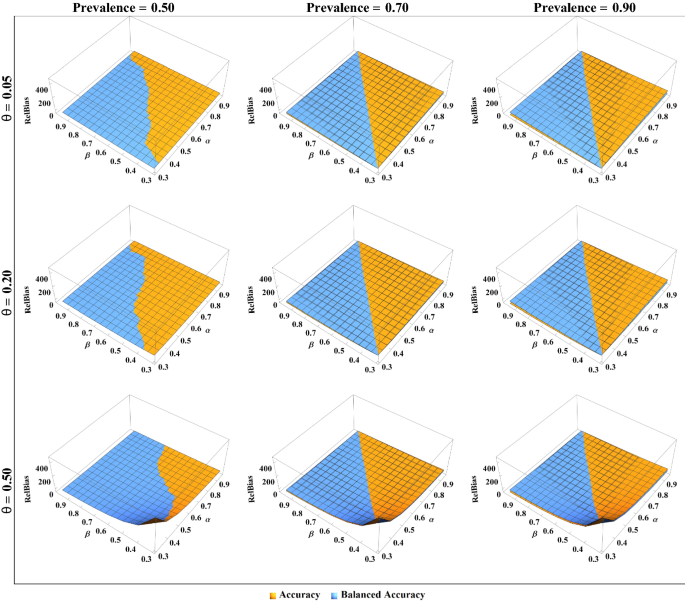

Fair evaluation of classifier predictive performance based on binary confusion matrix | Computational Statistics

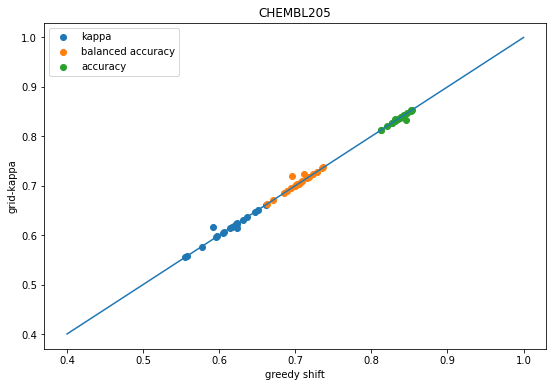

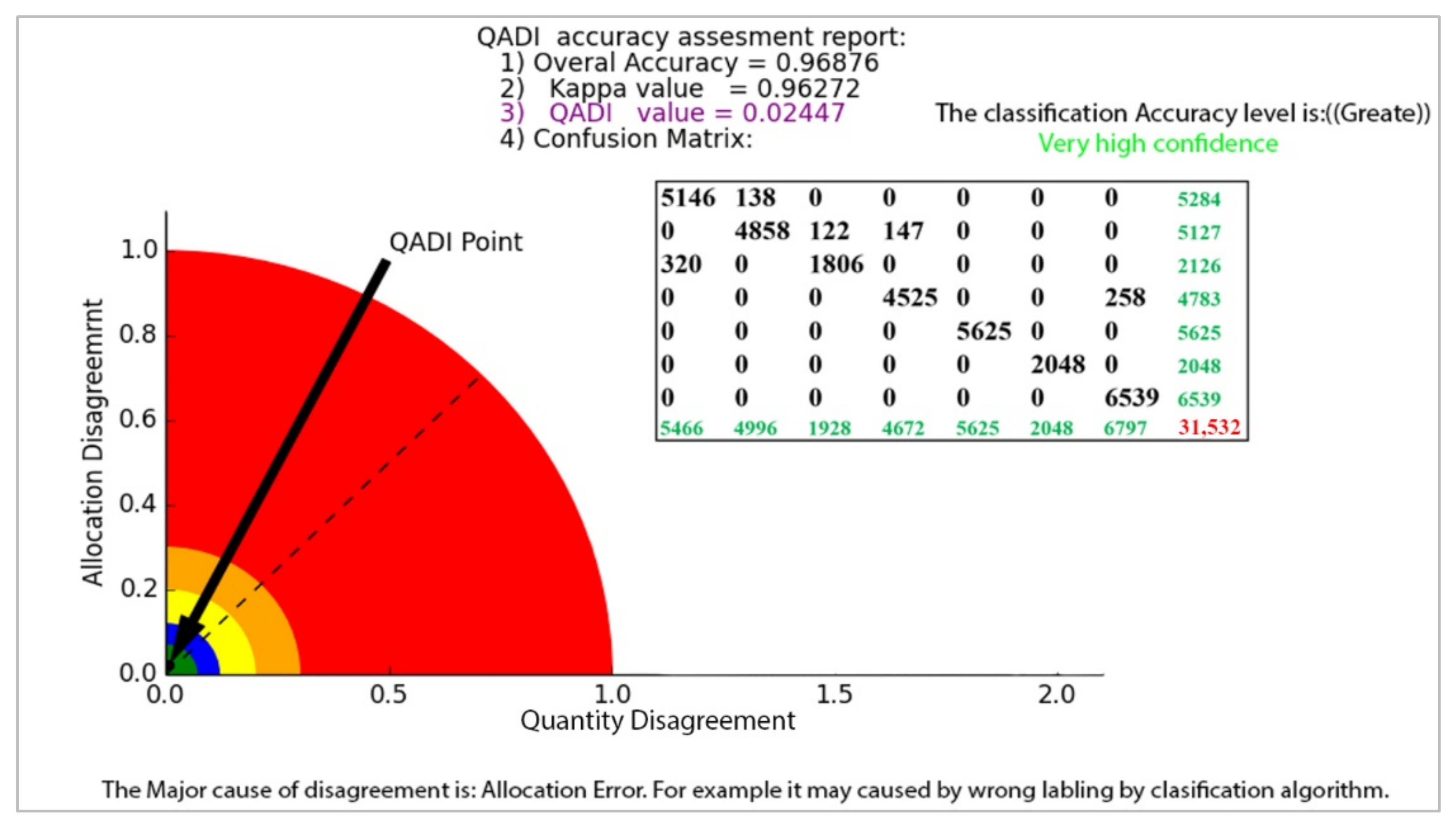

QADI as a New Method and Alternative to Kappa for Accuracy Assessment of Remote Sensing-Based Image Classification | Sensors

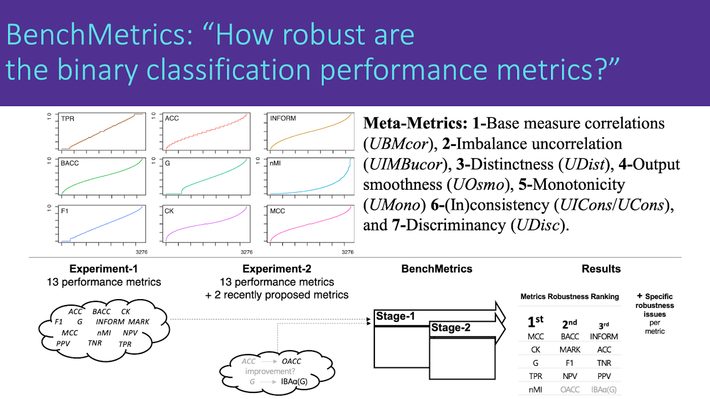

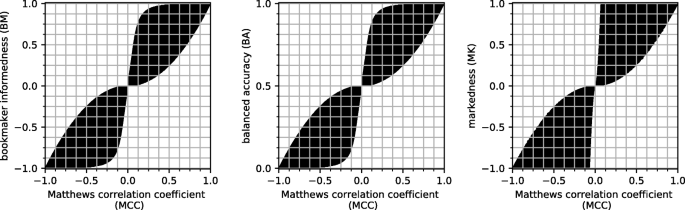

The Matthews correlation coefficient (MCC) is more reliable than balanced accuracy, bookmaker informedness, and markedness in two-class confusion matrix evaluation | BioData Mining
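Balanced accuracy, bookmaker informedness (BM) and markedness (MK) are computed from the same four confusion-matrix counts, and MCC is their signed geometric mean (MCC² = BM · MK). Below is a minimal Python sketch of these formulas. It assumes that no row or column of the confusion matrix is empty, so every rate is defined. The function name `classwise_metrics` and the example counts are hypothetical.

```python
import math


def classwise_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Balanced accuracy, BM, MK and MCC from a binary confusion matrix.

    Hypothetical helper; assumes all four marginals are non-zero.
    """
    tpr = tp / (tp + fn)  # sensitivity (recall)
    tnr = tn / (tn + fp)  # specificity
    ppv = tp / (tp + fp)  # precision
    npv = tn / (tn + fn)  # negative predictive value

    ba = (tpr + tnr) / 2  # balanced accuracy
    bm = tpr + tnr - 1    # bookmaker informedness
    mk = ppv + npv - 1    # markedness
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {"ba": ba, "bm": bm, "mk": mk, "mcc": mcc}


if __name__ == "__main__":
    m = classwise_metrics(tp=20, fp=15, tn=60, fn=5)
    print(m)
    # MCC squared equals the product of informedness and markedness
    print(math.isclose(m["mcc"] ** 2, m["bm"] * m["mk"]))
```

Balanced accuracy and BM depend only on sensitivity and specificity, whereas MK depends on precision and negative predictive value. Because MCC combines BM and MK, it stays high only when both pairs of rates are high.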

Per-continent, box plots of the performance metrics (Balanced Accuracy...